Research Projects

Fully Attentional Networks with Self-emerging Token Labeling [Paper]

This is the project I worked on during my internship at NVIDIA. We proposed a novel training paradigm that uses the self-emerging knowledge of Transformer-based models to improve the pre-training of Vision Transformers (ViTs). With only 77M parameters, our best model ranked at the top of the leaderboards of several datasets worldwide.

I'm grateful to have worked with the amazing NVIDIA team, especially my manager Jose M. Alvarez, my mentor Zhiding Yu, and Shiyi Lan.

Clean-label Poisoning Availability Attacks [Paper]

In this project, we proposed a clean-label poisoning attack that compromises model availability. We used a GAN trained with a triplet loss to generate poisoned data that is both stealthy and effective.
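For readers unfamiliar with the triplet loss, here is a minimal sketch of the standard triplet margin loss, max(0, d(a, p) - d(a, n) + margin). It is the textbook formulation and not necessarily the exact objective used in the paper. The function names, the Euclidean distance, and the default `margin=1.0` are illustrative assumptions.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two equal-length feature vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def triplet_loss(anchor, positive, negative, margin=1.0):
    """Standard triplet margin loss (illustrative, not the paper's code).

    The loss is zero once the anchor is closer to the positive than to the
    negative by at least `margin`; otherwise it grows with the violation.
    """
    d_pos = euclidean(anchor, positive)
    d_neg = euclidean(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)


if __name__ == "__main__":
    # Positive at distance 1, negative at distance 5: margin satisfied, loss 0.0.
    print(triplet_loss([0.0, 0.0], [0.0, 1.0], [3.0, 4.0]))
    # Roles swapped: 5 - 1 + 1 = 5.0.
    print(triplet_loss([0.0, 0.0], [3.0, 4.0], [0.0, 1.0]))
```

How anchors, positives, and negatives are chosen in the poisoning setting is not described here, so the example only shows the loss itself.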

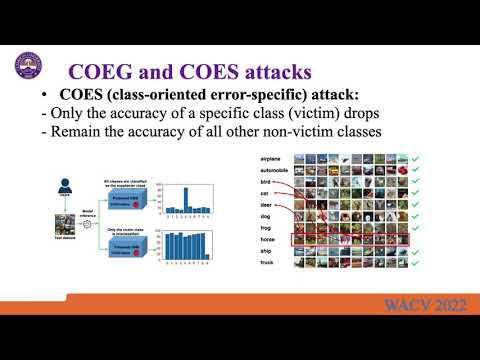

Class-oriented Poisoning Attacks [Paper]

In this project, we extended the adversarial goal of availability poisoning to a per-class basis. Using our COEG and COES attacks, we control the model's predictions for each class.